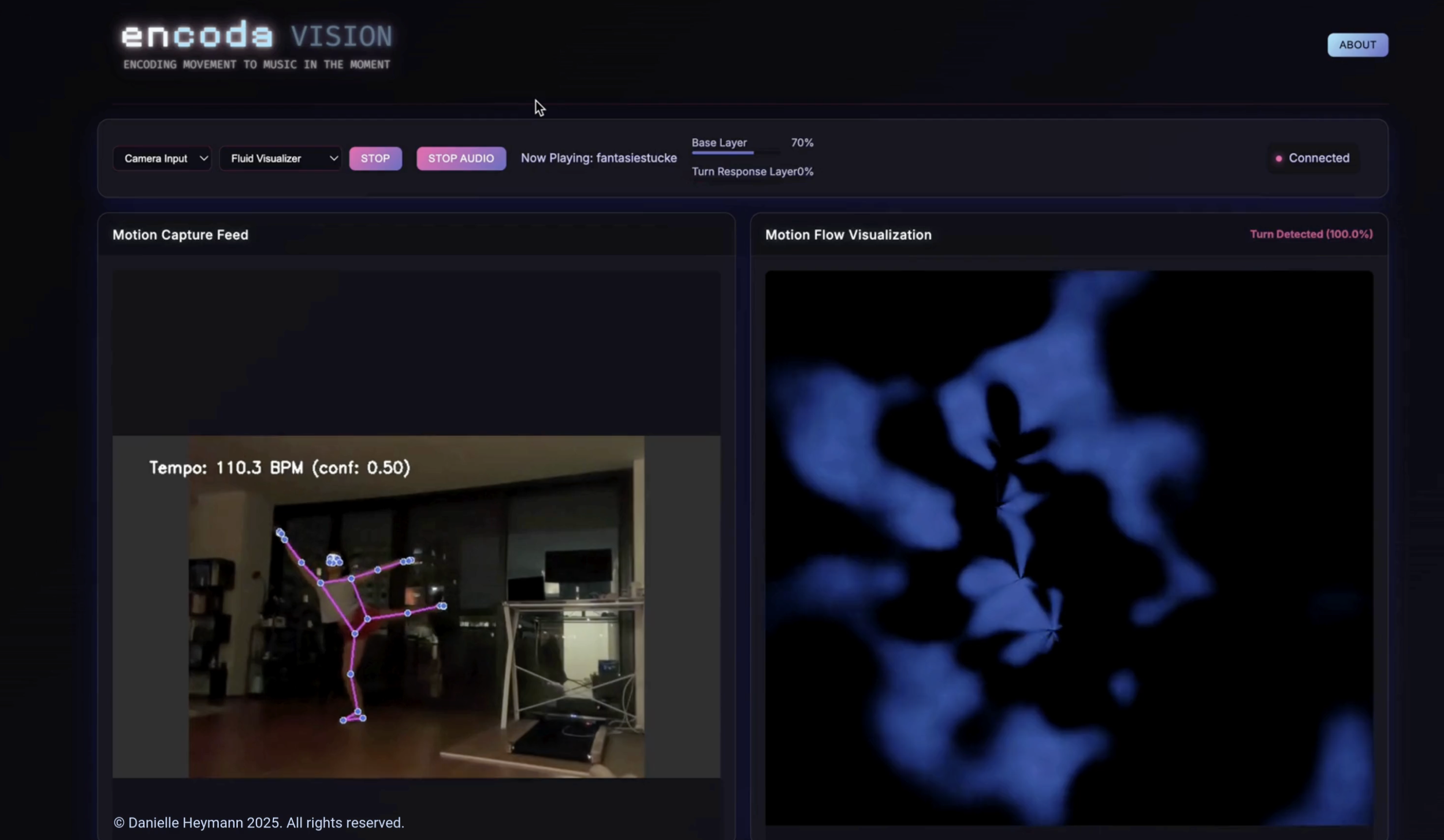

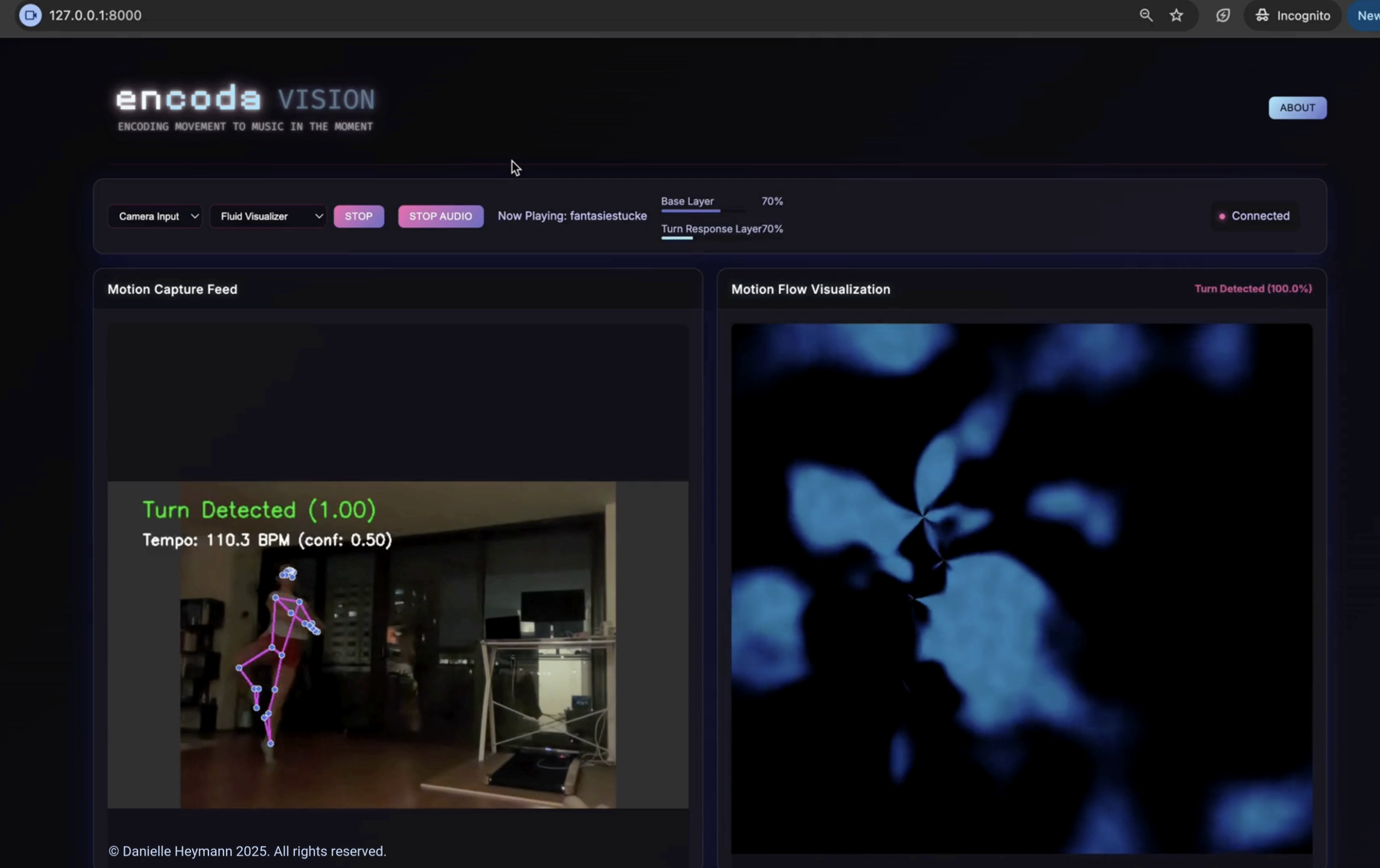

Encoda VISION is an app that lets dancers control music through movement. This interactive system turns dance into a musical interface, so performers can shape sound through their physical expression.

How It Works

Turning movements add instrument layers (like the cello in the initial demo)

Floor contact transitions between different tracks

Movement patterns shape abstract visualizations

Dance dynamics automatically adjust the tempo of the music

The result is a seamless conversation between the body and music, where dancers gain creative control over the sonic environment they perform within.
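The mappings listed above can be sketched as a small per-frame update. This is only an illustration under stated assumptions, not encoda VISION's actual code: the `FrameFeatures` fields, the `MusicState` class, the linear energy-to-tempo rule, and the BPM range are all hypothetical, and the visualization mapping is left out.

```python
from dataclasses import dataclass, field


@dataclass
class FrameFeatures:
    """Hypothetical per-frame output of the movement tracker."""
    turning: bool        # the dancer is mid-turn
    floor_contact: bool  # some part of the body other than the feet touches the floor
    energy: float        # movement intensity, expected in the range 0.0 to 1.0


@dataclass
class MusicState:
    """Hypothetical playback state driven by the dancer."""
    track_index: int = 0
    layers: set = field(default_factory=set)
    tempo_bpm: float = 90.0


def update_music(state, features, prev_floor_contact,
                 num_tracks=2, min_bpm=60.0, max_bpm=140.0):
    """Apply one frame of movement features to the music state."""
    # A turn adds an instrument layer (the cello, as in the demo).
    if features.turning:
        state.layers.add("cello")

    # Switch tracks only on the frame where floor contact begins,
    # so holding the contact does not keep skipping tracks.
    if features.floor_contact and not prev_floor_contact:
        state.track_index = (state.track_index + 1) % num_tracks

    # Assumed rule: tempo scales linearly with clamped movement energy.
    energy = min(max(features.energy, 0.0), 1.0)
    state.tempo_bpm = min_bpm + energy * (max_bpm - min_bpm)
    return state
```

Calling `update_music` once per video frame, and passing the previous frame's `floor_contact` value, keeps the track change tied to the moment of contact rather than its duration.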

Technical Implementation

Built from scratch using AI-driven computer vision models, encoda VISION tracks and interprets movement in real time without limiting the dancer's freedom of expression. The system enhances rather than constrains artistic possibilities.
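As one illustration of what interpreting movement can mean in practice, here is a minimal sketch of counting full turns from tracked shoulder positions. It assumes a pose model that reports each shoulder as an `(x, z)` pair in the horizontal plane (left-right position and depth); that input format, the function names, and the approach itself are assumptions for the example, not a description of the models encoda VISION uses.

```python
import math


def shoulder_heading(left, right):
    """Angle of the left-to-right shoulder line in the (x, z) plane, in radians."""
    dx = right[0] - left[0]
    dz = right[1] - left[1]
    return math.atan2(dz, dx)


def wrapped_delta(a, b):
    """Smallest signed rotation from angle a to angle b, in [-pi, pi)."""
    return (b - a + math.pi) % (2 * math.pi) - math.pi


def count_full_turns(frames):
    """Count completed 360-degree turns.

    frames: iterable of (left_shoulder, right_shoulder) pairs,
    each shoulder being an (x, z) tuple for one video frame.
    """
    total = 0.0
    prev = None
    for left, right in frames:
        heading = shoulder_heading(left, right)
        if prev is not None:
            # Accumulate small frame-to-frame rotations so the running
            # total keeps growing past the +/- pi wrap-around of atan2.
            total += wrapped_delta(prev, heading)
        prev = heading
    # The small tolerance absorbs floating-point rounding at exact turns.
    return int(abs(total) / (2 * math.pi) + 1e-9)
```

Accumulating wrapped frame-to-frame differences works only if the dancer rotates less than half a turn between consecutive frames, which holds comfortably at ordinary video frame rates.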

Creative Philosophy

As both a computer scientist/engineer and an artist, I created encoda VISION to bridge these two worlds. While it may not solve global challenges, it explores the frontier of what's possible when technology and artistic expression merge.

Vision & Collaboration

Encoda VISION represents my ongoing exploration of the intersection between art and technology. The project continues to evolve, and I'm open to collaborations with dancers, musicians, and other artists interested in exploring this new creative medium.

Demo

Encoda Vision: Motion-Controlled Music

Watch as movement transforms into music with encoda VISION, my custom-built computer vision application that lets dancers control sound through their movements.

In this demo, turning movements activate instrument layers (listen for the cello entering), floor contact changes tracks, and the dancer's movements dynamically adjust tempo while generating responsive visualizations.

The system can be fully customized with different movement keys and activations: choreographers and performers can define which gestures trigger specific musical elements, when new layers appear, or how tracks transition. This flexibility allows for personalized performance experiences tailored to different movement styles and artistic visions.
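A customizable setup like this could be expressed as a plain table from gestures to actions. The sketch below is hypothetical: the gesture names, the `Action` type, and `resolve_actions` are invented for illustration and are not encoda VISION's configuration format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A hypothetical musical action triggered by a gesture."""
    kind: str         # e.g. "add_layer" or "next_track"
    target: str = ""  # e.g. the instrument layer to add


# Mapping that mirrors the demo: turns add the cello, floor contact changes track.
DEFAULT_MAPPING = {
    "turn": Action("add_layer", "cello"),
    "floor_contact": Action("next_track"),
}


def resolve_actions(gestures, mapping):
    """Return the actions for the detected gestures, ignoring unmapped ones."""
    return [mapping[g] for g in gestures if g in mapping]


# A choreographer could extend the defaults without touching the detection code.
custom_mapping = {**DEFAULT_MAPPING, "jump": Action("add_layer", "percussion")}
```

Keeping the mapping as data means a piece can be re-choreographed by editing one dictionary, while gesture detection and audio playback stay unchanged.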

Built from scratch using AI-driven computer vision, encoda VISION gives dancers creative control over the soundscape they perform within. While I'm not a professional ballet dancer, this demo showcases how the technology responds to movement in real time.

Music:

Pastorale in C minor - Jacques Loussier Trio

Schumann: Cello Concerto, Adagio & Allegro, Fantasiestücke - Yo-Yo Ma, Sir Colin Davis, Bavarian Radio Symphony Orchestra & Emanuel Ax